El Reg: “DataCore had a terrific year in 2013, breaking the 10,000 customer site number. It's a solidly successful supplier with a technology architecture that's enabled it and its customers to take advantage of industry standard server advances and storage architecture changes gracefully. 2014 should be no different with DataCore being stronger still by the end of it.”

Article: Future storage tech should KILL all-in-one solutions

El Reg had a conversation with DataCore president, CEO and co-founder George Teixeira about what’s likely to happen in 2014 with DataCore. Software-defined storage represents a trend that his company is well-positioned to take advantage of and he reckons DataCore could soar this year.

El Reg: How would you describe 2013 for DataCore?

George Teixeira: 2013 was the ‘tip of the iceberg’, in terms of the increasing complexity and the forces in play disrupting the old storage model, which has opened the market up. As a result, DataCore is positioned to have a breakout year in 2014 … Our momentum surged forward as we surpassed 25,000 license deployments at more than 10,000 customer sites [last year].

What’s more, the EMC ViPR announcement showcased the degree of industry disruption. It conceded that commoditisation and the movement to software-defined storage are inevitable. It was the exclamation point that the traditional storage model is broken.

El Reg: What are the major trends affecting DataCore and its customers in 2014?

George Teixeira: Storage got complicated as flash technologies emerged for performance, while SANs continued to optimise utilisation and management - two completely contradictory trends. Add cloud storage to the mix and all together, it has forced a redefinition of the scope and flexibility required by storage architectures. Moreover, the complexity and need to reconcile these contradictions put automation and management at the forefront, thus software.

A new refresh cycle is underway … the virtualisation revolution has made software the essential ingredient to raise productivity, increase utilisation, and extend the life of … current [IT] investments.

Last year set the tone. Sales were up in flash, commodity storage, and virtualization software. In contrast, look what happened to the expensive, higher margin system sales last year – they were down industry-wide. Businesses realized they can no longer afford inflexible and tactical hardware-defined models. Instead, they are increasingly applying their budget dollars to intelligent software that leverages existing investments and lower cost hardware – and less of it!

Server-side and flash technology for better application performance has taken off. The concept is simple. Keep the disks close to the applications, on the same server and add flash for even greater performance. Don’t go out over the wire to access storage for fear that network latency will slow down I/O response. Meanwhile, the storage networking and SAN supporters contend that server-side storage wastes resources and that flash is only needed for five percent of the real-world workloads, so there is no need to pay such premium prices. They argue it is better to centralize all your assets to get full utilization, consolidate expensive common services like back-ups and increase business productivity by making it easier to manage and readily shareable.

The major rub is obvious. It appears when local disk and flash storage resources, which improve performance, defeat the management and productivity gains from those same resources by unhooking them from being part of the centralised storage infrastructure. Software that can span these worlds appears to be the only way to reconcile the growing contradiction and close the gap. Hence the need for an all-inclusive software-defined storage architecture.

A software-defined storage architecture must manage, optimise and span all storage, whether located server-side or over storage networks. Both approaches make sense and need to be part of a modern software-defined architecture.

Why force users to choose? Our latest SANsymphony-V release allows both worlds to live in harmony since it can run on the server-side, on the SAN or both. Automation in software and auto-tiering across both worlds is just the beginning. Future architectures must take read and write paths, locality, cache and path optimizations and a hundred other factors into account and generally undermine the possibility of all-in-one solutions. A true ‘enterprise-wide’ software-defined storage architecture must work across multiple vendor offerings and across the varied mix and levels of RAM devices, flash technologies, spinning disks and even cloud storage.

El Reg: How will this drive DataCore's product (and service?) roadmap this year?

George Teixeira: The future is an increasingly complex but hidden environment, which will neither allow nor require much, if any, human intervention. This evolution is natural. What does one expect from today’s popular virtualization offerings and major applications, if not exactly this type of sophistication and transparency? Why should storage be any different?

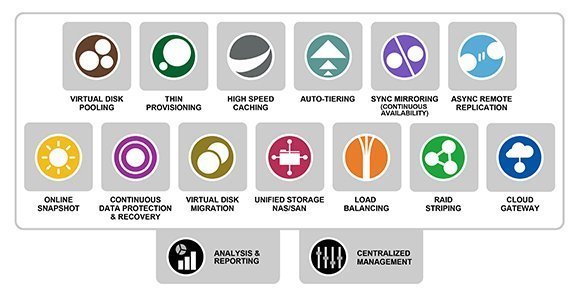

Unlike others who are talking roadmaps and promises, DataCore is already out front and has set the standard for software-defined storage, as our software is in production at thousands of real world customer sites today. DataCore offers the most comprehensive and universal set of features, and these features work across all the popular storage vendor brands, models of storage arrays and flash devices, automating and optimizing the use of these devices no matter where they reside, within servers or in storage networks.

DataCore will continue to focus on evolving its server-side capabilities and on enhancing the performance and productive use of in-memory technologies like DRAM, flash and new wave caching technologies across storage environments. [We'll take] automation to the next level.

DataCore already scales out to support up to 16-node grid architectures and we expect to quadruple that number this year.

[We] will continue to abstract complexity away from the users and reduce mundane tasks through automation and self-adaptive technologies to increase productivity. ... For larger scale environments and private cloud deployments, there will be a number of enhancements in the areas of reporting, monitoring and storage domain capabilities to simplify management and optimise ‘enterprise-wide’ resource utilisation.

VSAN "opens the door without walking through it."

El Reg: How does DataCore view VMware's VSAN? Is this a storage resource it can use?

George Teixeira: Simply put, it opens the door without walking through it. It introduces virtual pooling capabilities for server-side storage that meet lower-end requirements while delivering promises of things to come for VMware-only environments. It sets the stage for DataCore to fulfil customers’ need to seamlessly build out a production class, enterprise-wide software-defined storage architecture.

It opens up many opportunities for DataCore for users who want to upscale. VSAN builds on DataCore concepts but is limited and just coming out of beta, whereas DataCore has a 9th generation platform in the marketplace.

Beyond VSAN, DataCore spans a composite world of storage running locally in servers, in storage networks, or in the cloud. Moreover, DataCore supports both physical and virtual storage for VMware, Microsoft Hyper-V, Citrix, Oracle, Linux, Apple, Netware, Unix and other diverse environments found in the real world.

Will you be cheeky enough to take a bite at EMC?

El Reg: How would you compare and contrast DataCore's technology with EMC's ScaleIO?

George Teixeira: We see ourselves as a much more comprehensive solution. We are a complete storage architecture. I think the better question is how will EMC differentiate its ScaleIO offerings from VMware VSAN solutions?

Both are trying to address the server-side storage issues using flash technology, but again why have different software and separate islands or silos of management, one for the VMware world, one for the Microsoft Hyper-V world, one for the physical storage world, one for the SAN?

This looks like a divide and complicate approach when consolidation, automation and common management across all these worlds are the real answers for productivity. That is the DataCore approach. Instead, this silo approach of many offerings appears to be driven by the commercial requirements of EMC versus the real needs of the marketplace.

El Reg: Does DataCore envisage using an appliance model to deliver its software?

George Teixeira: Do we envisage an appliance delivery model? Yes, in fact, it already exists. We work with Fujitsu, Dell and a number of system builders to package integrated software/hardware bundles. We are rolling out the new Fujitsu DataCore SVA storage virtualization appliance platform within Europe this quarter.

We also have the DataCore appliance builder program in place, targeting the system builder community, and have a number of partners, including Synnex Hyve Solutions, which provides virtual storage appliances using Dell, HP or Supermicro Appliances Powered by DataCore and Fusion-io.

El Reg: How will DataCore take advantage of flash in servers used as storage memory? I'm thinking of the SanDisk/SMART ULLtraDIMM used in IBM's X6 server and Micron's coming NVDIMM technology.

George Teixeira: We are already positioned to do so. New hybrid NVDIMM type technology is appealing. It sits closer to the CPU and promises flash speeds without some of the negatives. But it is just one of the many innovations yet to come.

Technology will continue to blur the line between memory and disk technologies going forward, especially in the race for faster application response times by getting closer to the CPU and central RAM.

Unlike others who will have to do a lot of hard coding, and suffer the delays and reversals of naivety trying to keep up with new innovations, DataCore learned from experience and designed its software early on to rapidly absorb new technology and make it work. Bring on these types of innovations; we are ready to make the most of them.

El Reg: Does DataCore believe object storage technology could be a worthwhile addition to its feature set?

George Teixeira: Is object storage worthwhile? Yes, we are investing R&D and building out our OpenStack capabilities as part of our object storage strategy; other interfaces and standards are also underway. It is part of our software-defined storage platform, but it makes no sense to bet simply on the talk in the marketplace and on these emerging strategies exclusively.

The vast bulk of the marketplace is file and block storage capabilities and that is where we are primarily focused this year. Storage technology advances and evolves, but not necessarily in the way we direct it to, therefore object storage is one of the possibilities for the future along with much more relevant, near term advances that customers can benefit from today.
